Artificial Intelligence (AI) has emerged as a transformative technology for financial institutions (FIs), reshaping how they generate revenue, deepen client engagement, optimize operations, and strengthen risk management.

At Nomura, we recognize AI as a strategic capability and have established dedicated AI Centers of Excellence (AI CoE) to enable AI across both our Japan and international businesses. Although AI, and particularly generative AI (GenAI), offers many opportunities, it also introduces risks that must be proactively managed. This report outlines some of the key AI risk typologies and recommends best-practice governance that enables innovation while ensuring AI solutions are deployed responsibly and ethically and comply with global regulatory standards.

Why AI Governance Matters

For decades, FIs have developed, used, and relied on AI, particularly in areas such as risk management, quantitative trading, and portfolio optimization. The rapid rise of GenAI, however, has opened further opportunities across business lines and functions. At the same time, clients, regulators, and industry participants are increasingly aware of AI and have heightened expectations of the value it can deliver.

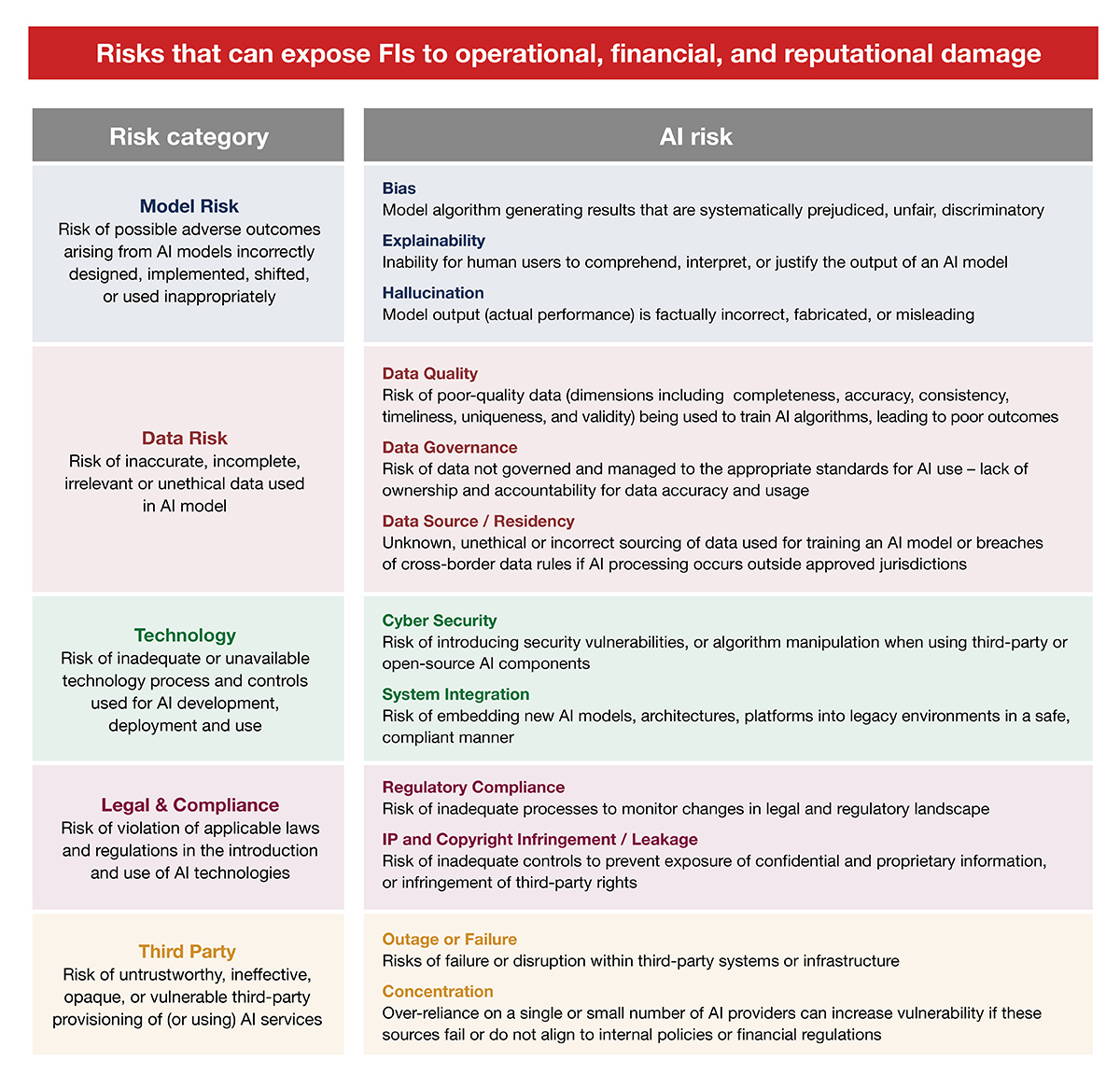

While AI opens new opportunities, it also affects FI risk taxonomies, both amplifying existing risks and introducing new ones. These include challenges such as model complexity, interpretability, reliance on third-party providers and platforms, cross-jurisdictional compliance, and evolving rules and regulations.

Given the range of AI risks, stakeholders increasingly require assurance that these risks are being effectively identified, managed, and mitigated. As a result, many FIs are actively establishing AI governance structures to ensure responsible and compliant adoption. However, AI governance must be designed as an enabler, not a constraint that slows or restricts innovation.

Balancing Innovation with Compliance – Best Practice Considerations

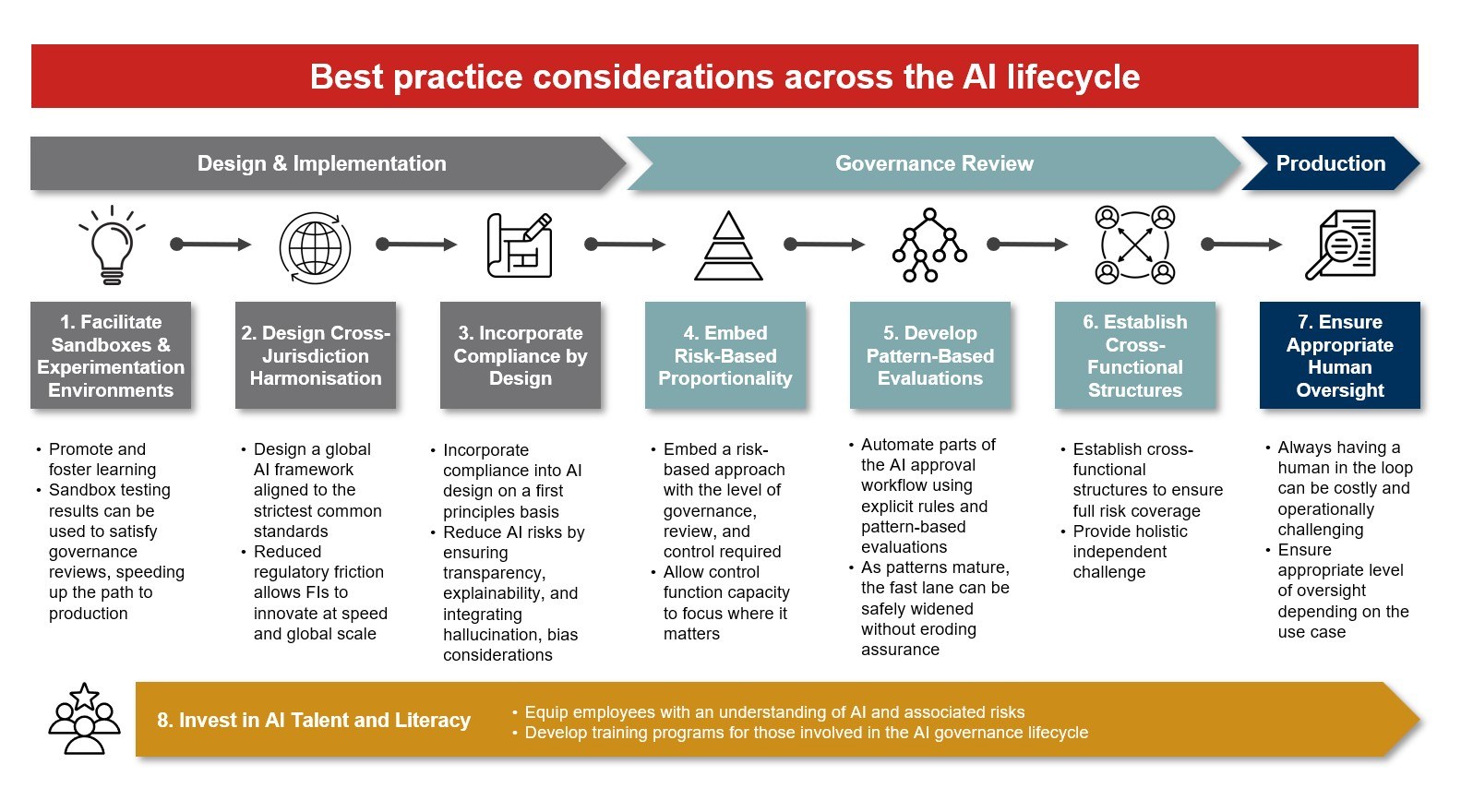

Drawing on our experience, we offer the following field-tested best-practice recommendations for establishing appropriate AI governance, safety guardrails, and controls that protect firms without constraining innovation.

1. Facilitate Sandboxes and Controlled Experimentation Environments

A sandbox is a controlled environment in which innovators can test new technologies without the risks and restrictions of full production deployment. These isolated environments can accelerate AI innovation by allowing development teams to explore, test, and learn.

Data access is where sandboxes save the most time. In a sandbox, sensitive data can be masked, or synthetic data sets made available, so teams can begin early modeling without waiting for data-access approvals or resolving restrictions on certain data sets. Results from sandbox testing can also serve as evidence for governance and risk committees, speeding up the path to production. In this way, controlled experimentation can act as a protective fast lane for AI deployment. Nomura also welcomes regulatory sandboxes, as they can reduce the information gap between FIs and regulators and help create and refine AI policy.
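As an illustration of the masking step, the sketch below replaces sensitive fields with keyed pseudonyms (HMAC-SHA256) before records enter a sandbox. Because the same input and key always yield the same token, joins across masked tables still work, while raw values never leave production. The field names, the `tok_` prefix, and the key handling are hypothetical assumptions for illustration, not a description of any particular firm's tooling.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as sensitive in this example.
SENSITIVE_FIELDS = {"client_name", "account_id", "email"}


def pseudonymize(value: str, key: bytes) -> str:
    """Return a stable, non-reversible token for a sensitive value (HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def mask_record(record: dict, key: bytes, sensitive=SENSITIVE_FIELDS) -> dict:
    """Copy a record, pseudonymizing sensitive fields and leaving the rest unchanged."""
    return {
        name: pseudonymize(str(value), key) if name in sensitive else value
        for name, value in record.items()
    }


if __name__ == "__main__":
    # Assumption: in practice the key would come from a secrets manager, not source code.
    key = b"example-sandbox-key"
    trade = {"client_name": "Example Corp", "account_id": "A-001", "notional": 1_000_000}
    print(mask_record(trade, key))
```

Keyed hashing is used here rather than plain hashing so that someone without the key cannot rebuild the mapping by hashing guessed client names; fully synthetic data is the alternative when even stable pseudonyms are too revealing.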

2. Design for Cross-Jurisdictional Harmonization

The AI regulatory environment is evolving, and rules and enforcement are proliferating across markets. However, there are common threads and trends across AI regulations and guidelines, including:

- Risk-tiered controls – applying a risk-based approach in which each use case's risk profile determines the appropriate control requirements

- Model governance and explainability – demonstrating AI model oversight, testing and interpretability

- Accountability – board and senior management are accountable, with defined escalation paths

- Transparency obligations – informing users when they are interacting with AI and disclosing when content is AI-generated

- Threat prevention – conducting regular tests to maintain AI system integrity, robustness, and security

- Data quality and provenance – AI systems are trained on high-quality, unbiased data that is subject to appropriate governance and management controls

These commonalities allow for a pragmatic approach: a single firmwide governance framework aligned to the strictest applicable requirements, with local addendums to meet regional obligations. This approach provides a degree of harmonization and reduces regulatory friction, allowing FIs to innovate confidently, at speed and at global scale. Banks can comply once, reuse that evidence many times, and apply portable local assurance where required.
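The "baseline plus local addendums" structure can be sketched as a control catalog in which each control is evidenced once and that evidence is reused for every jurisdiction that requires it. The sketch below is a minimal illustration under stated assumptions: the control IDs mirror the common threads listed earlier, while `REGION_A`, `REGION_B`, and their addendum controls are hypothetical placeholders, not real regulatory obligations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    control_id: str
    description: str


# Hypothetical firmwide baseline: one control per common regulatory thread.
BASELINE = [
    Control("RISK-TIER", "Classify the use case risk profile"),
    Control("EXPLAIN", "Evidence model oversight, testing and interpretability"),
    Control("ACCOUNT", "Name an accountable senior owner and escalation path"),
    Control("DISCLOSE", "Disclose AI interaction and AI-generated content"),
    Control("SECURITY", "Run regular integrity, robustness and security tests"),
    Control("DATA", "Evidence data quality and provenance"),
]

# Hypothetical local addendums; region names and controls are placeholders.
ADDENDUMS = {
    "REGION_A": [Control("A-RECORDS", "Local record-retention addendum")],
    "REGION_B": [
        Control("B-NOTICE", "Local notification addendum"),
        Control("DISCLOSE", "Already satisfied by the baseline control"),
    ],
}


def required_controls(jurisdictions):
    """Baseline plus local addendums, de-duplicated by control_id (baseline wins)."""
    controls = {c.control_id: c for c in BASELINE}
    for region in jurisdictions:
        for c in ADDENDUMS.get(region, []):
            controls.setdefault(c.control_id, c)
    return list(controls.values())


def evidence_gaps(jurisdictions, evidence):
    """Control IDs with no evidence yet; one artifact per ID serves every region."""
    return [c.control_id for c in required_controls(jurisdictions)
            if c.control_id not in evidence]


if __name__ == "__main__":
    evidence = {c.control_id: f"link-to-{c.control_id}" for c in BASELINE}
    print(evidence_gaps(["REGION_A", "REGION_B"], evidence))
```

Deploying into an additional region then surfaces only that region's addendum controls as new work; everything already evidenced against the baseline carries over.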

3. Incorporate Compliance by Design

In the same way that an architect incorporates safety into the design of a bridge, development teams should be encouraged to embed safety measures when developing AI solutions. Compliance by design means incorporating compliance practices from first principles at the start of AI design and embedding them throughout the development lifecycle. This ensures that transparency, explainability, fairness (including bias mitigation), and trust are integral to the AI solution's design, architecture, data, processes, and models.

4. Embed Risk-Based Proportionality

Not every AI model carries the same level of impact or risk, so not every model requires the same level of scrutiny and review. For example, higher-risk use cases that affect clients or financial stability require intensive governance reviews and controls, while lower-risk use cases can be governed with lighter, faster, but still effective controls. Tiers can be defined using a number of factors, including who will interact with the solution (internal users or clients), what type of data is used (for example, confidential data or personally identifiable information), and business criticality. Tailoring governance to risk in this proportionate, agile way, while still ensuring all risks are effectively mitigated, allows FIs to innovate faster without compromising safety.
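To make the tiering factors concrete, the sketch below scores the three factors named above (audience, data type, business criticality) and maps the total to a tier and a review path. The weights, thresholds, data classes, and review descriptions are hypothetical assumptions chosen for illustration; a real scheme would be calibrated to the firm's own risk appetite.

```python
from enum import Enum


class Tier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical weights for the most sensitive class of data the solution uses.
DATA_SCORES = {"public": 0, "internal": 1, "confidential": 2, "pii": 3}

# Hypothetical review depth per tier: lighter and faster for lower risk.
REVIEW_PATH = {
    Tier.LOW: "self-assessment checklist with spot checks",
    Tier.MEDIUM: "control-function review",
    Tier.HIGH: "full governance committee review",
}


def risk_tier(client_facing: bool, data_class: str, business_critical: bool) -> Tier:
    """Score the three tiering factors and map the total to a tier."""
    if data_class not in DATA_SCORES:
        raise ValueError(f"unknown data class: {data_class!r}")
    score = DATA_SCORES[data_class]
    score += 2 if client_facing else 0
    score += 2 if business_critical else 0
    if score >= 5:
        return Tier.HIGH
    if score >= 2:
        return Tier.MEDIUM
    return Tier.LOW


if __name__ == "__main__":
    tier = risk_tier(client_facing=True, data_class="pii", business_critical=False)
    print(tier.name, "->", REVIEW_PATH[tier])
```

An additive score keeps the rules explainable to reviewers: each factor's contribution is visible, and moving a threshold is a single, auditable change.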

5. Develop Pattern-Based Evaluations

FIs are seeing use cases move rapidly from experimentation and proofs of concept to full-scale development and deployment, creating a persistent backlog of production approvals. To keep pace without diluting assurance, FIs can automate parts of the approval workflow using explicit rules and pattern-based evaluations, freeing control function experts to focus on higher-risk or novel use cases.

The following is an example of a Decision Model and Notation (DMN)-style service that maintains governance at pace by creating an approval fast lane for straight-through processing:

- Triage – classify each use case by risk level and route it to a lane: for example, a fast lane for low-risk, well-templated submissions; an assisted lane for medium-risk cases requiring review; or a committee lane for high-risk or novel patterns requiring heightened review and scrutiny

- Decision – approvals are bound to explicit control evidence (risk classification, data lineage, testing results, human oversight approach, etc.)

- Learn (pattern-based evaluation) – a retrieval service mines past assessments and completed approvals to suggest likely controls and conditions for new use cases. Review outcomes continuously refine the pattern library, so decisions get faster and more consistent over time
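The three steps above can be sketched in plain Python as a stand-in for a DMN decision table with a FIRST hit policy (rules are checked in order and the first match wins). This is not a DMN engine. The lane names follow the text, and the evidence items mirror the Decision step's examples, but the `Submission` fields and the risk scale are hypothetical. For the Learn step, simple tag overlap (Jaccard similarity) stands in for the retrieval service the text describes; a production system might use a search index or embeddings instead.

```python
from dataclasses import dataclass, field

# Evidence every approval must be bound to (mirrors the Decision step).
REQUIRED_EVIDENCE = {"risk_classification", "data_lineage", "test_results", "human_oversight"}


@dataclass
class Submission:
    name: str
    risk_tier: str          # "low", "medium" or "high" (hypothetical scale)
    uses_template: bool     # built from a pre-approved pattern?
    novel: bool             # technique or use not seen before
    evidence: set = field(default_factory=set)
    tags: set = field(default_factory=set)


def triage(s: Submission) -> str:
    """Decision table with a FIRST hit policy: rules are checked top to bottom."""
    if s.novel or s.risk_tier == "high":
        return "committee"
    if s.risk_tier == "low" and s.uses_template:
        return "fast"
    return "assisted"


def decide(s: Submission):
    """Return (outcome, missing_evidence); nothing is approved without evidence."""
    missing = REQUIRED_EVIDENCE - s.evidence
    if missing:
        return "returned", missing
    lane = triage(s)
    if lane == "fast":
        return "auto-approved", set()
    return f"queued:{lane}", set()


def jaccard(a: set, b: set) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def suggest_controls(s: Submission, past_approvals, top_k: int = 2):
    """Learn step: reuse controls from the most similar past approvals (tag overlap)."""
    ranked = sorted(past_approvals, key=lambda p: jaccard(s.tags, p[0]), reverse=True)
    suggestions = []
    for tags, controls in ranked[:top_k]:
        if jaccard(s.tags, tags) == 0:
            continue  # unrelated precedent: do not suggest its controls
        for control in controls:
            if control not in suggestions:
                suggestions.append(control)
    return suggestions


if __name__ == "__main__":
    past = [({"genai", "internal"}, ["output logging", "HOTL sampling"]),
            ({"trading"}, ["kill switch"])]
    s = Submission("meeting summarizer", "low", True, False,
                   evidence=set(REQUIRED_EVIDENCE), tags={"genai", "internal", "summarization"})
    print(triage(s), decide(s), suggest_controls(s, past))
```

Only a low-risk, templated submission with complete evidence is auto-approved. Everything else is either returned with a list of what is missing or queued for human review, so the fast lane never bypasses the evidence requirement.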

6. Establish Cross-Functional Governance Structures

Reviewing and approving AI solutions for production cannot sit within a single team or discipline; it requires experts from across the organization, including data, operational risk, cyber security, legal, compliance, technology, and model risk. Establishing governance structures and forums with cross-functional participation, alignment, and support is key to a complete and holistic approach to managing AI risk. Together, these functions must also ensure that operationalized AI solutions align with the financial institution’s risk appetite, policies, and standards in a consistent and transparent manner.

7. Ensure Appropriate Human Oversight

Humans provide the ultimate safeguard between AI output and real-world impact. They can act as a control against risks such as bias and hallucination while also providing ethical reasoning and intuition. The level of human oversight can be described by three models:

- Human in the Loop (HITL) – a human is always directly involved, reviewing and approving the output of an AI model before any action is taken

- Human on the Loop (HOTL) – a human supervises the AI model’s output but does not review or approve all of it. They monitor performance and step in only when they find anomalies, model drift, or other risks

- Human out of the Loop (HOOTL) – the AI acts autonomously with minimal or no human oversight or intervention

Always having a human in the loop to review all AI model output and decisions can be costly, operationally challenging, and lead to bottlenecks and scalability issues. Establishing the appropriate level of human oversight is critical to ensuring AI systems are both safe and operationally efficient.
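The operational trade-off between the three oversight models can be sketched as a routing rule applied to each AI output. The sketch assumes each output carries a model confidence score and treats low confidence as the HOTL anomaly signal; the 0.7 threshold is a hypothetical value. Real HOTL monitoring would also watch aggregate signals such as model drift, which this example omits.

```python
from enum import Enum


class Oversight(Enum):
    HITL = "human_in_the_loop"
    HOTL = "human_on_the_loop"
    HOOTL = "human_out_of_the_loop"


def route_output(mode: Oversight, confidence: float, anomaly_threshold: float = 0.7) -> str:
    """Decide what happens to a single AI output under each oversight model."""
    if mode is Oversight.HITL:
        return "hold_for_approval"  # a human approves every output before action
    if mode is Oversight.HOTL:
        # Released automatically; low-confidence outputs are flagged for the supervisor.
        return "release_and_flag" if confidence < anomaly_threshold else "release"
    return "release"  # HOOTL: autonomous, no per-output review


def review_load(mode: Oversight, confidences, anomaly_threshold: float = 0.7) -> int:
    """Count the outputs a human must look at, to show how review cost scales."""
    actions = [route_output(mode, c, anomaly_threshold) for c in confidences]
    return sum(action != "release" for action in actions)


if __name__ == "__main__":
    scores = [0.95, 0.6, 0.8, 0.4]
    for mode in Oversight:
        print(mode.name, review_load(mode, scores))
```

For the same batch of outputs, HITL puts every item in front of a reviewer, HOTL surfaces only the flagged ones, and HOOTL none. This is the cost and scalability trade-off described above, and it is why the choice of model usually follows the use case's risk tier.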

8. Invest in AI Talent and Literacy

Firms should prioritize change management and workforce readiness, because adoption often fails without trust and training. They must invest in AI literacy programs and continuous learning to equip employees at all levels with the knowledge to engage with AI responsibly. Functional domains cannot rely solely on subject matter expertise within their verticals; they also need AI expertise to bridge the gap between AI implementation and compliance obligations. This cross-skilled capability reduces compliance paralysis, where uncertainty slows innovation, and enables faster, more confident, and responsible deployment of AI solutions.

Nomura’s AI Governance Framework – A Strategic Advantage

At Nomura, speed and safety aren’t opposing forces but complementary capabilities that, when properly balanced, create a sustainable competitive advantage. Our frameworks allow us to innovate with confidence, scale responsibly, and continue to earn the trust of our clients, regulators, and the wider financial services ecosystem. We monitor guidance from global regulatory bodies to ensure proactive compliance and to keep our frameworks aligned with leading global standards, including the Monetary Authority of Singapore’s (MAS) Fairness, Ethics, Accountability and Transparency (FEAT) principles in the use of AI, the MAS Artificial Intelligence Model Risk Management information paper, the EU AI Act, and other emerging regulatory and industry guidelines. By embedding AI governance into every stage of the AI development and deployment lifecycle, Nomura is well-positioned to help shape the future of AI in financial services, ensuring it is controlled, ethical, responsible, and trustworthy.